ACTION selected a group of 15 students (high school, undergraduate, and graduate) for an 8-week research experience at UCSB. 2024 marks the inaugural year of the summer program.

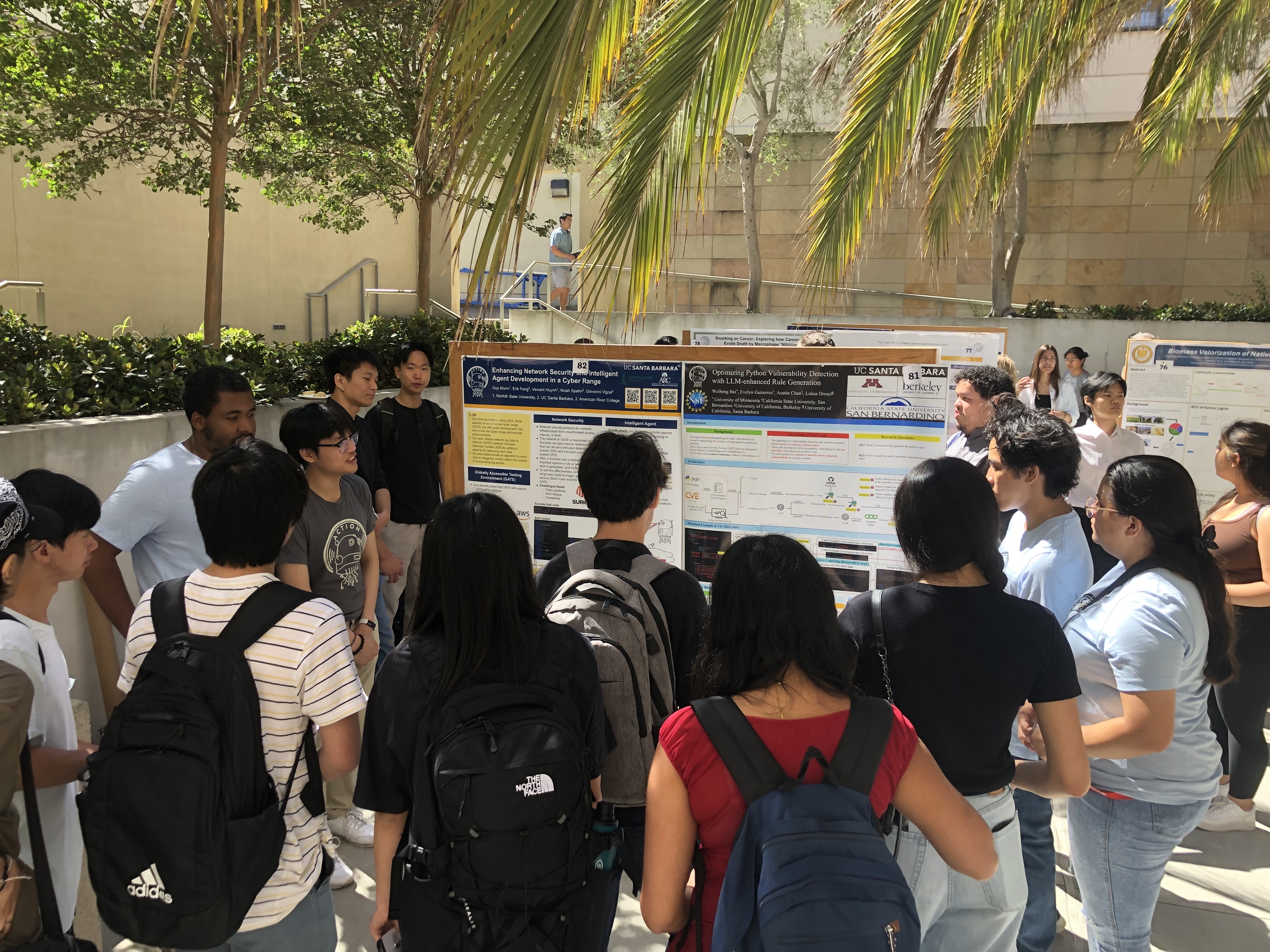

Student researchers joined with participants from other undergraduate summer programs, including McNair Scholars and groups managed by the Center for Engineering and Science Partnerships (CSEP). All students had opportunities to attend skills development seminars and to network with other programs.

After an 8-week experience, ACTION was able to show four collaborative posters at the 2024 Undergraduate Research Symposium. Each project team consisted of one graduate student, two undergraduates, and a mentor, who was either a senior-level PhD student, a postdoctoral scholar, or a software engineer. A fifth project was undertaken by a high school student from the Bay Area. Her abstract is below. Unfortunately, she had to return to school before the poster session began.

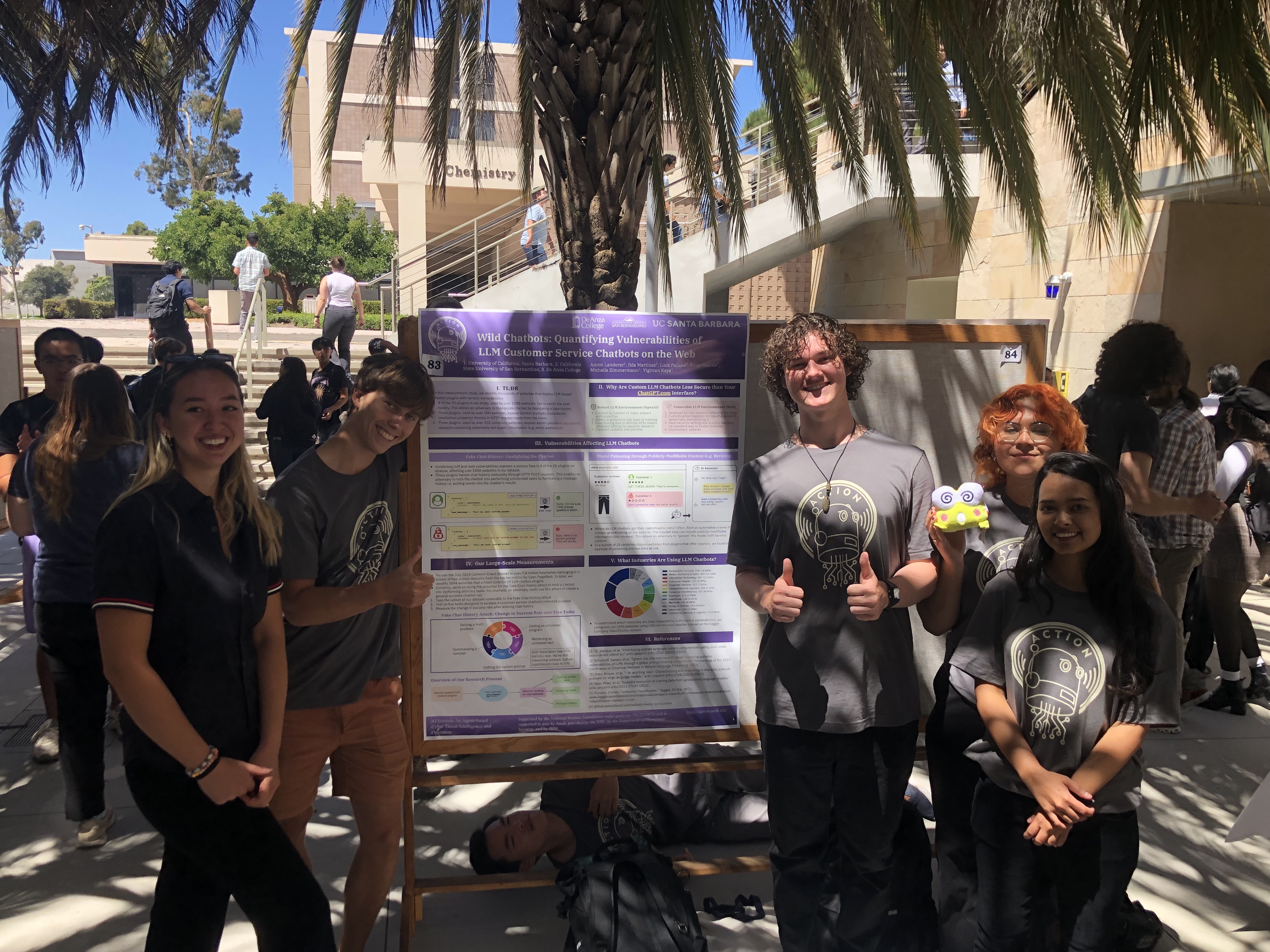

Wild Chatbots: Quantifying Vulnerabilities of LLM Customer Service Chatbots on the Web

Anton Landerer, UC Santa Barbara

Michelle Zimmerman, UC Santa Barbara

Luca Paliska, De Anza College

Ilda Martinez, CSU San Bernardino

Swati Saxena, CSU San Bernardino

Yigitcan Kaya (Mentor), UC Santa Barbara

The research community is widely studying Large Language Model (LLM) vulnerabilities in sanitized, isolated, and updated environments provided by the handful of major players, such as OpenAI, yet the security of customized LLM applications deployed by non-experts across the web remains underexplored. Consequently, it is unclear how much LLM applications on the web, such as e-commerce chatbots, deviate from these environments and if adversaries gain an advantage from these deviations. We conducted a study of thousands of websites that deploy LLM-based chatbots, identifying serious security violations by third-party chatbot plugins. First, we discovered that 8 out of the 20 plugins in our study, used by over 1500 websites, do not verify the integrity of the message history between the chatbot and the user. This enables an adversary to fabricate a message history and manipulate the chatbots, boosting their ability to make a chatbot perform tasks unintended by the website owners by 20-50%. Second, three plugins, used by over 500 websites, expose admin instructions, considered intellectual property, directly to users, giving leverage to adversaries. Third, three plugins, used by over 250 university websites, expose admin-provided documents verbatim through chatbots. We found non-public information, e.g., email addresses, in these documents, posing a privacy risk. Our research aims to enhance the security and reliability of AI-driven customer service. Future research should focus on developing comprehensive security frameworks for LLM chatbots to safeguard user data and bolster trust in AI systems.

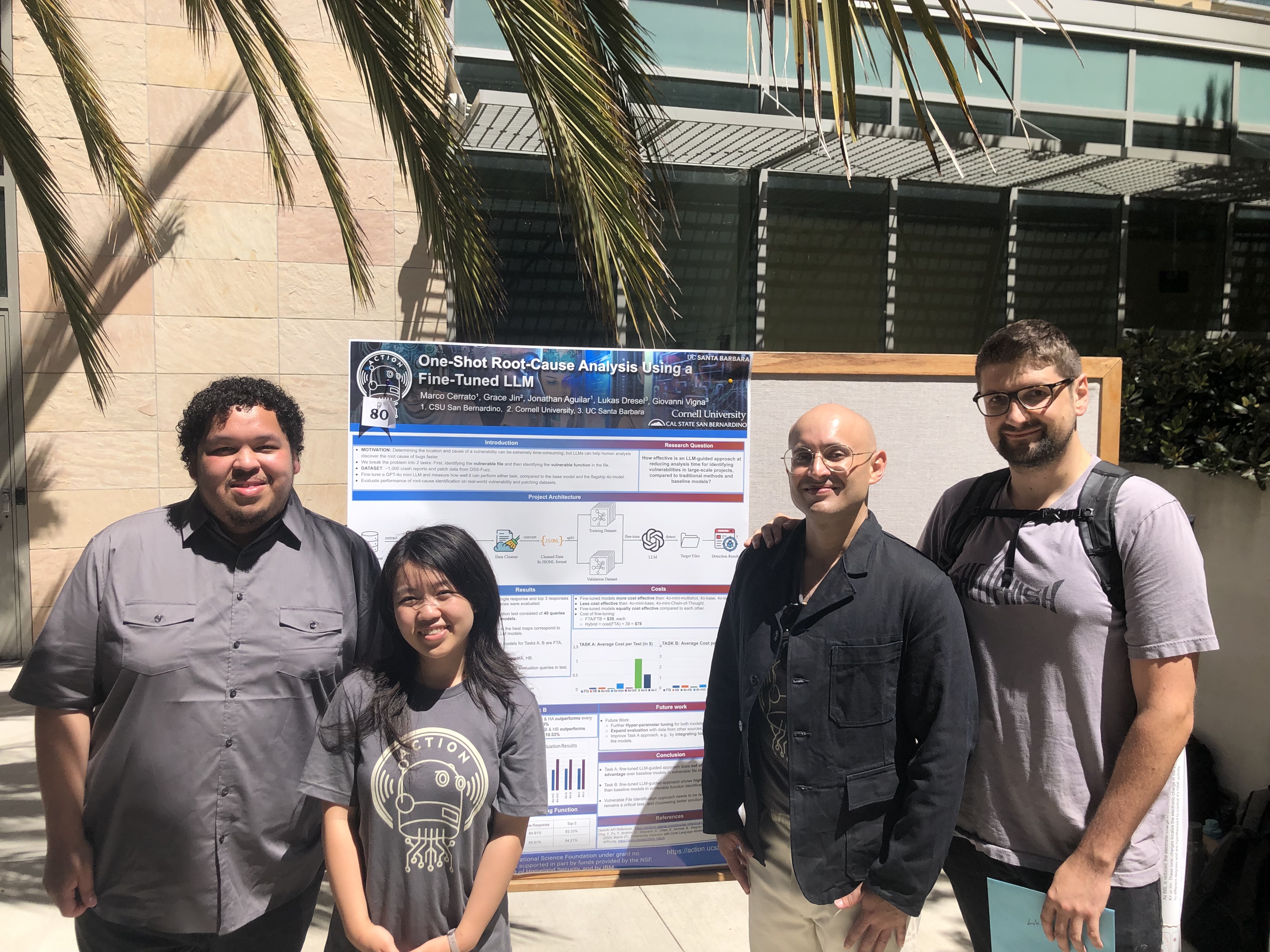

One-Shot Root-Cause Analysis Using a Fine-Tuned LLM

Jonathan Aguilar, CSU San Bernardino

Grace Jin, Cornell University

Marco Cerrato, CSU San Bernardino

Lukas Dresel (Mentor), UC Santa Barbara

Vulnerabilities in low-level programming languages like C are difficult to identify and debug. They do not always manifest in the vulnerable location, and therefore necessitate root-cause analysis, which is typically done manually with debuggers like GDB and rr. Unfortunately, this process is labor-intensive and requires significant expertise.

We propose a large language model(LLM)-guided approach where the model suggests likely vulnerable code locations, thereby reducing analysis time. To handle the complexity of large projects, like the Linux kernel, we divide the task into two sub-tasks: 1) Identifying the source file with the vulnerability using crash reports and C file lists, and 2) Identifying the vulnerable function within the source file using its code and crash reports. We demonstrate our approach by fine-tuning OpenAI’s gpt-4o-mini model with crash report data from OSS-fuzz with crash reports and patch data.

We evaluate our approach against baseline models (gpt-4o-mini, gpt-4o) and prompt-engineering techniques like few-shot and chain-of-thought prompting.

In single response testing, our fine-tuned models identify the vulnerable file and function in 62.50% and 64.91% of cases, respectively, and 79.19% and 84.21% in top 3 responses testing. While our model showed no advantage over the baselines in the first task, it outperformed them by at least 12.28% in the second task. Although our model was twice as expensive as the base, it was only 12% of the cost of the flagship gpt-4o model.

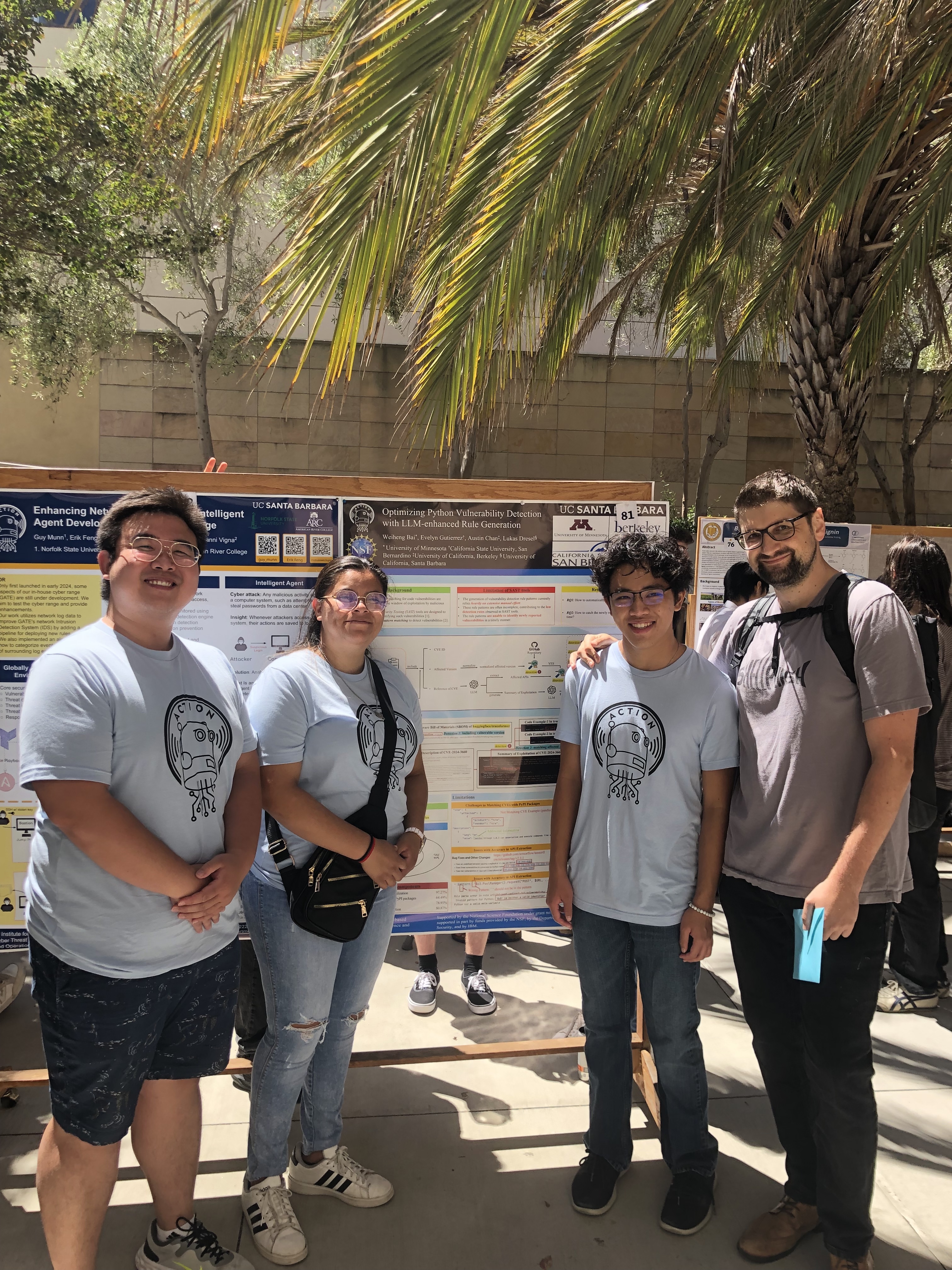

Optimizing Python Vulnerability Detection with LLM-Enhanced Rule Extraction

Weiheng Bai, University of Minnesota

Evelyn Gutierrez, CSU San Bernardino

Austin Chan, UC Berkeley

Lukas Dresel (Mentor), UC Santa Barbara

The widespread adoption of Python across various domains necessitates robust security measures to safeguard Python code. Existing static analysis tools predominantly rely on pattern matching to identify potential vulnerabilities, necessitating the manual creation of detection rules. However, the development of such handwritten detection rules is both time-consuming and requires substantial expertise. Consequently, these tools often fail to keep pace with the rapidly increasing number and diversity of vulnerabilities, resulting in an incomplete detection of security issues. To address these challenges, we propose a novel tool built on top of Semgrep that automatically generates detection pattern rules for newly identified vulnerabilities in real-time. Our approach entails continuous real-time monitoring of updates to the Common Vulnerabilities and Exposures (CVE) database. Leveraging the capabilities of large language models (LLMs), our tool extracts pertinent information about affected APIs from newly reported CVEs and formulates detection pattern rules for their vulnerable usage. These rules are subsequently applied to identify vulnerabilities in packages that depend on the affected APIs. To evaluate the efficacy of our method, we conducted a preliminary study on TensorFlow. Our findings reveal that 30.53% of dependent packages of TensorFlow directly import TensorFlow within their codebase. By applying the generated detection pattern rules to the directly imported package, we discovered that 98.86% of these dependent packages recommend at least one vulnerable version of TensorFlow. Moreover, 62.69% of the dependent packages match at least one reported vulnerability. Notably, existing static analysis tools failed to detect any of these vulnerabilities.

Enhancing Network Security and Intelligent Agent Development in a Cyber Range

Guy Munn, Norfolk State University

Erik Feng, UC Santa Barbara

Vincent Huynh, American River College

Noah Spahn (Mentor), UC Santa Barbara

The ACTION AI Institute’s in-house cyber range, the Globally Accessible Testing Environment (GATE), simulates the cyber-infrastructure of a “smart city,” allowing researchers to run experiments, practice cyber-security skills, and develop autonomous AI agents. Only launched in early 2024, some aspects of GATE are still under development. Our project aims to test the cyber range and provide enhancements. In GATE, all network events generate logs that can be used to extract insights about the nature and intent of software running throughout the interconnected networks of the virtual city. Our work utilizes this network log data to improve the network Intrusion Detection System (IDS) of GATE with the addition of a pipeline to deploy new rules. We wrote custom rules to detect specific network events and developed a script to trigger the rules, ensuring that the IDS is functioning properly. We also implemented a state-of-the-art algorithm to learn how to categorize events within the context of surrounding log entries. The algorithm categorizes streams of logs into different clusters with context-aware deep learning. The algorithm is deployed as an intelligent agent that can monitor system logs to observe and summarize patterns, which can then be acted upon by the agent or, optionally, presented to a security operator. Overall, the effectiveness of GATE was enhanced in two ways: with the addition of actionable network rules that can be deployed in GATE and an algorithm to learn and contextualize events in the network logs.

DALLAS: a reputation-based system to improve the HOUSTON anomaly detection system

Rhea Mordani, Mission San Jose High School

Christopher Kruegel (Mentor), UC Santa Barbara

I developed a reputation-based system (DALLAS) to reduce the number of false positives flagged by the HOUSTON anomaly detection system. To achieve this, I collected five key features:

- Interaction with Tornado Cash: Tornado Cash is a DeFi service often used as a money laundering tool. Using a service like this might suggest that the transaction sender is hiding the origins of their funds and attempting to perform an attack.

- Interaction with KYC exchanges: Some exchanges implement KYC (Know Your Customer) services that link transactions to real-life identities. This factor was used to identify benign users.

- Age of the contract: Using UCSB’s Erigon node, I calculated the age of the contract involved being executed in the transaction. Attack contracts are often created moments before the attack is executed.

- Number of transactions sent: Using the Web3py Python library, I calculated the number of transactions sent by the transaction sender before the current transaction. Typically, attackers perform a small number of transactions before their attack.

- Amount of money received: I used UCSB’s SQL transaction database and the UniSwap Python library to observe the movement of money. Attack transactions often result in large sums of money received by the transaction sender or the contract executed.

I used these five features to train and test a decision tree classifier. However, this model missed approximately 25% of actual attacks. To reduce the error, I am training a decision tree regression model on an expanded dataset, to find an ideal threshold that reduces false positives without compromising the number of detected attacks.

The 8-week research experience concluded with a debrief over a group lunch and a promise to run again in 2025.

If you are interested in interning with the ACTION AI Institute, be sure to check our Education & Outreach page for an application starting in November.